Machine Learning Simplified

Created on 11 December, 2025 • Tech Blog

Machine learning explained simply: Supervised, unsupervised, and reinforcement learning, deep networks, and real-world applications.

Table of Contents

- Defining the Core Concept: Beyond Explicit Programming

- The Foundational Workflow: The ML Pipeline

- Data Ingestion and Feature Engineering

- Model Training and Optimization

- The Three Pillars of Learning: A Comprehensive Taxonomy

- Deep Learning: The Evolution of Neural Networks

- Real-World Impact and Applications

- Challenges, Ethics, and The Responsibility of AI

- Conclusion: Empowering the Future with Data

Defining the Core Concept: Beyond Explicit Programming

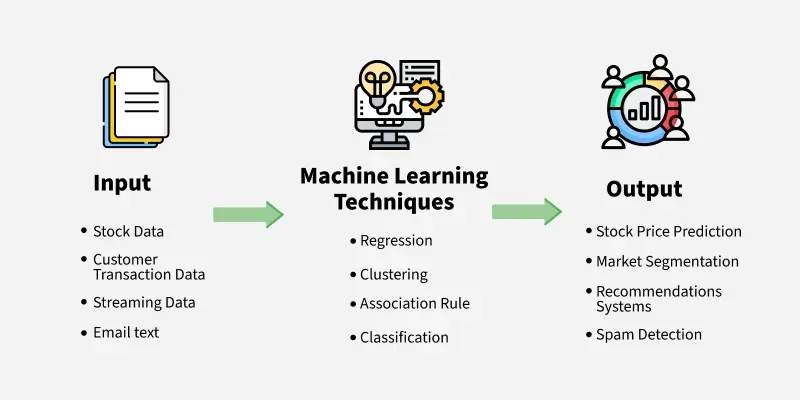

Machine Learning (ML) is the cornerstone of modern Artificial Intelligence (AI) and represents a shift in how computer systems are developed. In traditional programming, engineers explicitly write every rule and logical step the computer must follow to solve a problem; in Machine Learning, the system learns those rules from data. It operates on the principle that a machine exposed to a sufficient volume of examples can identify and generalize the underlying patterns, then apply them to make predictions or decisions on new, unseen data. Arthur Samuel, a pioneer in the field, is widely credited with describing it as giving computers the ability to learn without being explicitly programmed, a description that still holds today.

The essence of ML lies in its ability to generalize. Instead of writing thousands of lines of code to define every characteristic of a fraudulent transaction, an ML algorithm is fed millions of transaction records, each labeled as either "fraud" or "legitimate." Through numerical optimization, the algorithm iteratively adjusts its internal weights and parameters until it can reliably map the input features (location, amount, time) to the correct output label (fraud/legitimate). The final output of this process is the **model**, a mathematical function capable of performing this mapping on any new transaction. This capacity to capture complex, non-linear relationships is what makes ML useful across diverse fields, from forecasting models to autonomous vehicles and generative AI tools such as Large Language Models (LLMs).

The Foundational Workflow: The ML Pipeline

Successful deployment of a Machine Learning system is not solely dependent on the algorithm; it is a rigorous, systematic process known as the ML workflow or pipeline. This process ensures the model is developed, validated, and maintained correctly across its entire lifecycle, transforming a raw business problem into a functioning autonomous solution.

Data Ingestion and Feature Engineering

The foundation of any machine learning project is **data**, which often consumes the majority of a project's time and resources. Raw data is rarely clean; it is typically messy, containing missing values, errors, inconsistencies, and irrelevant information. The initial steps involve **Data Collection**, gathering the necessary information from databases, sensors, or external APIs, followed by intensive **Data Preprocessing**. This preprocessing phase includes cleaning the data, handling outliers, and normalizing or standardizing numerical values to ensure the algorithm is not misled by vastly different scales.
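To make the preprocessing step concrete, here is a minimal sketch in plain Python. It assumes a single hypothetical numeric column (`incomes`) with one missing entry, fills the gap with the column mean, and then standardizes the values to zero mean and unit variance. The helper names `impute_mean` and `standardize` are illustrative and not part of any library, and mean imputation is only one of several reasonable strategies.

```python
from statistics import mean, pstdev

def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]

def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

# Hypothetical raw column with one missing value.
incomes = [30000, 45000, None, 60000, 75000]
clean = impute_mean(incomes)
scaled = standardize(clean)
```

After this step, a feature measured in tens of thousands and a feature measured in single digits end up on comparable scales, which is the point of standardization.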

Crucially, this phase includes **Feature Engineering**. Features are the individual, measurable input variables that the model uses to make a prediction. For a house price prediction model, features include square footage, neighborhood, and number of bedrooms. Feature Engineering is the art of manipulating raw data to create new, more informative features that better expose the underlying patterns to the algorithm. For example, converting a raw date into a feature that indicates the "number of days until a holiday" might be far more informative for a sales forecasting model. High-quality features are paramount, as the machine can only learn the patterns that are actually present within the features it is given.
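The holiday example can be sketched in a few lines. The `HOLIDAYS` list and the function name `days_until_next_holiday` are assumptions made for illustration; a real project would load its own holiday calendar.

```python
from datetime import date

# Hypothetical holiday calendar used only for this illustration.
HOLIDAYS = [date(2025, 11, 27), date(2025, 12, 25)]

def days_until_next_holiday(day, holidays=HOLIDAYS):
    """Turn a raw date into the number of days until the next known holiday."""
    upcoming = [h for h in holidays if h >= day]
    if not upcoming:
        return None  # no holiday left in the calendar
    return (min(upcoming) - day).days

feature = days_until_next_holiday(date(2025, 12, 11))
```

The raw date itself tells a model very little, while the derived number directly encodes how close a sale is to a seasonal peak.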

Model Training and Optimization

Once the data is clean and the features are engineered, the dataset is typically split into three sets: the **Training Set** (used to fit the model's parameters), the **Validation Set** (used to compare candidate models and tune hyperparameters), and the **Test Set** (held out and used once for a final, unbiased evaluation). The chosen algorithm is then instantiated and trained on the Training Set. During training, the algorithm iteratively minimizes a **loss function** (also called an error function) by adjusting its internal weights. This repeated adjustment is how the model learns the relationship between the input features and the target labels.
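As a rough illustration of the split and the training loop, the sketch below uses plain Python, a hypothetical noise-free dataset that follows y = 2x + 1, a 60/20/20 split, and batch gradient descent on the mean squared error. The function names, split ratios, learning rate, and step count are all assumptions chosen for the example, not recommendations.

```python
import random

def split(rows, train_frac=0.6, val_frac=0.2, seed=0):
    """Shuffle the rows, then cut them into train / validation / test lists."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_train = round(len(rows) * train_frac)
    n_val = round(len(rows) * val_frac)
    return rows[:n_train], rows[n_train:n_train + n_val], rows[n_train + n_val:]

def mse(data, w, b):
    """Mean squared error of the line y = w * x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def fit_linear(data, lr=0.1, steps=20000):
    """Minimize the MSE with plain batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        # Gradients of the MSE with respect to the weight and the bias.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical noise-free data that follows y = 2x + 1.
rows = [(i / 20, 2 * (i / 20) + 1) for i in range(20)]
train, val, test = split(rows)
w, b = fit_linear(train)      # learn only from the training set
test_error = mse(test, w, b)  # evaluate once on data the model never saw
```

The validation set is left unused here; it comes into play when several candidate configurations need to be compared.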

A critical step following initial training is **Hyperparameter Tuning**. Hyperparameters are the configuration settings of the algorithm itself—such as the learning rate, the number of layers in a neural network, or the maximum depth of a decision tree—which are not learned from the data. Techniques like Grid Search or Bayesian Optimization are employed to systematically test different combinations of these hyperparameters against the Validation Set, aiming to maximize performance while avoiding two common pitfalls. The first pitfall is **underfitting**, where the model is too simple and fails to capture the complexity of the data. The second is **overfitting**, where the model learns the training data too well, including the noise and errors, and consequently performs poorly on new, unseen data.
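A grid search can be sketched with a deliberately simple model. The example below assumes a one-dimensional k-nearest-neighbors classifier written from scratch, a hypothetical noisy dataset, and a small grid of candidate values for the hyperparameter `k`. Each candidate is scored on the validation set and the best one is kept. Bayesian Optimization is not shown.

```python
import random

def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x."""
    neighbors = sorted(train, key=lambda row: abs(row[0] - x))[:k]
    votes = sum(label for _, label in neighbors)
    return 1 if votes * 2 > k else 0

def accuracy(train, data, k):
    """Share of points in `data` that the k-NN model labels correctly."""
    hits = sum(knn_predict(train, x, k) == y for x, y in data)
    return hits / len(data)

rng = random.Random(0)
# Hypothetical noisy data: the label is 1 when x > 0.5, flipped 15% of the time.
points = []
for _ in range(200):
    x = rng.random()
    label = int(x > 0.5)
    if rng.random() < 0.15:
        label = 1 - label
    points.append((x, label))
train, val = points[:150], points[150:]

# Grid search: score every candidate k on the validation set, keep the best.
grid = [1, 3, 5, 9, 15]
scores = {k: accuracy(train, val, k) for k in grid}
best_k = max(scores, key=scores.get)
```

A very small `k` tends to memorize the noisy labels (overfitting), while a very large `k` smooths over real structure (underfitting); the validation score is what arbitrates between them.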

The Three Pillars of Learning: A Comprehensive Taxonomy

Machine Learning problems are typically categorized by the nature of the data provided and the specific objective of the learning process.

Supervised Learning: Classification and Regression

Supervised learning is defined by the use of **labeled data**, where every input example is paired with the desired correct output. The machine acts as a student, learning under the direct guidance of these labels. The learning objective is to accurately map a given input to a known output, and it constitutes the majority of current real-world ML applications.

This category is split into two primary types of tasks. **Classification** models predict a discrete category or class, such as determining if an image contains a dog or a cat, flagging an insurance claim as fraudulent or legitimate, or classifying a customer review as positive, negative, or neutral sentiment. **Regression** models, in contrast, predict a continuous numerical value, such as forecasting tomorrow’s stock price, estimating the amount of rainfall, or predicting the required maintenance time for a piece of industrial machinery. Algorithms frequently used in this space include Linear Regression, Logistic Regression, Support Vector Machines (SVMs), and complex neural networks.
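To show what a classification model looks like in miniature, here is a sketch of Logistic Regression trained with gradient descent in plain Python. The single feature and the labels are hypothetical, and the learning rate and step count are assumptions picked for this toy dataset.

```python
import math

def sigmoid(z):
    """Squash any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, lr=0.1, steps=20000):
    """Fit weight w and bias b by gradient descent on the log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every example: predicted probability minus label.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b

# Hypothetical labeled data: one numeric feature, binary label.
xs = [1, 2, 3, 4, 5, 6]
ys = [0, 0, 0, 1, 1, 1]
w, b = fit_logistic(xs, ys)

def classify(x):
    """Return the discrete class by thresholding the probability at 0.5."""
    return int(sigmoid(w * x + b) >= 0.5)
```

The model internally produces a continuous probability, and the threshold turns it into a discrete class. A regression model would instead return the continuous value itself.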

Unsupervised Learning: Clustering and Dimensionality Reduction

Unsupervised learning deals with **unlabeled data**; the algorithm is provided with inputs but no corresponding target outputs or answers. The machine acts as an explorer, autonomously seeking out hidden structures, patterns, and intrinsic similarities within the data. The goal is primarily descriptive, aimed at uncovering new insights rather than making specific predictions.

One main technique is **Clustering**, which automatically groups similar data points together. For instance, a retailer might use K-means clustering to segment its customer base into distinct groups, such as "high-value shoppers" or "discount seekers," based purely on purchasing history, enabling highly targeted marketing campaigns. Another key technique is **Dimensionality Reduction**, used to simplify datasets with hundreds or thousands of features by projecting the data onto a lower-dimensional space while preserving its most important characteristics. This is vital for data visualization and for reducing the training time of other algorithms.
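The K-means idea can be sketched in plain Python. The six two-feature "customers" and the choice of starting centroids are hypothetical; real implementations usually pick starting centroids more carefully and stop when assignments no longer change.

```python
def sq_dist(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, centroids, iterations=10):
    """Alternate between assigning points and recomputing centroids."""
    labels = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda c: sq_dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        updated = []
        for c in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                updated.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                updated.append(centroids[c])  # keep an empty cluster in place
        centroids = updated
    return labels, centroids

# Hypothetical customers described by two already-scaled features.
customers = [(1.0, 1.2), (0.8, 1.0), (1.2, 0.9), (5.0, 5.2), (5.3, 4.8), (4.9, 5.1)]
labels, centroids = kmeans(customers, centroids=[customers[0], customers[3]])
```

No labels were supplied; the two groups emerge purely from the distances between points.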

Reinforcement Learning: Agents, Environments, and Rewards

Reinforcement Learning (RL) is a form of machine learning focused on sequential decision-making. It models learning as an iterative interaction between an **agent** and its **environment**. Unlike supervised learning, there are no static labels; instead, after each action the agent receives a numerical **reward**, which can be positive or negative (a **penalty**).

The objective is for the agent to learn the optimal **policy**, a strategy mapping states to actions, that maximizes the cumulative reward over the long term. This trial-and-error process is particularly well-suited to dynamic environments where the outcome of an action is uncertain and depends on previous actions. RL drives AI systems that learn to play complex games like Chess or Go at superhuman levels, is used to control industrial robotic arms, and is an active area of work for autonomous vehicles that must make real-time decisions in unpredictable traffic.
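The agent, environment, reward, and policy loop can be sketched with tabular Q-learning, one common RL algorithm. The environment here is a hypothetical five-cell corridor in which the agent starts at the left end and receives a reward of 1 for reaching the right end. All names and hyperparameter values are assumptions for illustration.

```python
import random

N_STATES = 5        # cells 0..4 in a corridor; cell 4 is the goal
ACTIONS = (-1, +1)  # index 0 moves left, index 1 moves right

def step(state, action):
    """Environment: move, clip at the walls, reward 1 on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a table of action values Q[state][action] by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon (or on ties), otherwise exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move toward reward plus discounted best future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

q_table = train()
# The greedy policy reads the best action for each non-goal cell off the table.
policy = ["right" if right > left else "left" for left, right in q_table[:-1]]
```

Nobody tells the agent that moving right is correct; the preference emerges because the discounted reward is larger along the shorter path to the goal.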

Deep Learning: The Evolution of Neural Networks

Deep Learning (DL) is a subset of Machine Learning that uses **Artificial Neural Networks (ANNs)** with multiple layers (hence "deep"). Loosely inspired by the structure of the human brain, these networks can automatically learn highly complex features from raw data, reducing or removing the need for manual Feature Engineering in many domains.

Convolutional Neural Networks (CNNs) for Vision and Transformers for Language

The evolution of deep learning is marked by specialized architectures tailored for specific data types. **Convolutional Neural Networks (CNNs)** revolutionized **Computer Vision**. They employ specialized layers that scan input data, such as images, using filters to automatically identify spatial hierarchies of features, starting from simple edges and textures in the first layers to complex shapes and objects in the deeper layers. CNNs are the backbone of facial recognition, medical image analysis, and self-driving car perception systems.
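The filter-scanning operation at the heart of a CNN can be sketched in plain Python. The tiny image and the hand-written vertical-edge filter below are hypothetical; in a trained CNN the filter values are learned rather than chosen by hand. As in most deep learning libraries, the operation shown is technically a cross-correlation (the filter is not flipped).

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Hypothetical 4x6 grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]
# Filter that responds to a dark-to-bright transition from left to right.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
feature_map = conv2d(image, vertical_edge)
# feature_map == [[0, 3, 3, 0], [0, 3, 3, 0]]
```

The feature map is zero over the flat regions and large where the window straddles the edge, which is how early layers come to detect edges and textures.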

For processing sequential data like text, speech, and time series, **Recurrent Neural Networks (RNNs)** were dominant for years, but they struggled with long-term dependencies. This limitation was largely addressed by the **Transformer** architecture, introduced in 2017. Transformers rely on a mechanism called **Self-Attention**, which lets the model weigh the importance of each part of the input sequence relative to every other part, regardless of the distance between them. This breakthrough enabled large, context-aware models such as BERT and GPT, and it underlies most modern **Large Language Models (LLMs)** and generative AI systems, which now perform strongly at translation, summarization, and generating coherent, human-like text.
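Self-attention can be sketched as scaled dot-product attention in plain Python. This is a simplification: the three token vectors are hypothetical, and the queries, keys, and values are taken to be the token vectors themselves, whereas a real Transformer first passes them through learned projection matrices and uses multiple attention heads.

```python
import math

def softmax(xs):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: each query mixes the values by similarity."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append(
            [sum(wi * v[j] for wi, v in zip(w, values)) for j in range(len(values[0]))]
        )
    return outputs, weights

# Hypothetical 2-dimensional embeddings for a 3-token sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Every token computes a weight for every other token in one step, so the distance between two positions in the sequence does not affect how strongly they can interact.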

Real-World Impact and Applications

The applications of Machine Learning have integrated deeply into the fabric of modern commerce and daily life, creating efficiencies and entirely new capabilities across sectors.

In **Finance**, ML is vital for **fraud detection**, where models score each transaction within milliseconds to flag anomalous activity. It is also used extensively in **algorithmic trading**, making high-speed buy and sell decisions based on complex market indicators, and in **credit scoring** to assess risk profiles. In **Healthcare**, ML models analyze patient data, genetic information, and medical images to assist doctors in diagnostics, such as identifying cancerous lesions from radiology scans or predicting patient risk for certain diseases based on genomic markers, supporting more personalized medicine.

**Autonomous Systems**, including self-driving cars, drone delivery systems, and robotic assembly lines, rely on a blend of supervised learning for object classification, unsupervised learning for environment mapping, and reinforcement learning for real-time path planning and decision-making. Finally, in **E-commerce**, ML powers the personalized **recommendation engines** that suggest products to users, optimizes supply chain logistics, and provides automated customer service through advanced chatbots.

Challenges, Ethics, and The Responsibility of AI

As ML models become more sophisticated and embedded in critical decision-making systems, several urgent ethical and technical challenges must be addressed to ensure responsible deployment.

The most pressing ethical concern is **Bias and Fairness**. Machine learning algorithms learn from the data they are given. If the training data reflects historical or societal prejudices—such as biased hiring records or disproportionate policing data—the model will not only learn but often amplify these biases, leading to discriminatory outcomes in areas like loan approvals, hiring, or criminal justice risk assessment. Mitigating this requires rigorous auditing of datasets for representative diversity and employing fairness-aware ML techniques.
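One simple audit is to compare how often a model gives a favorable outcome to each group, a measure often called demographic parity. The sketch below uses hypothetical model decisions and group labels; it covers only one of several fairness metrics and is not a complete fairness assessment.

```python
def approval_rate(decisions, groups, group):
    """Share of favorable decisions (1) among members of one group."""
    selected = [d for d, g in zip(decisions, groups) if g == group]
    return sum(selected) / len(selected)

def parity_gap(decisions, groups):
    """Largest difference in approval rate between any two groups."""
    rates = [approval_rate(decisions, groups, g) for g in set(groups)]
    return max(rates) - min(rates)

# Hypothetical model outputs (1 = approved) and group membership per applicant.
decisions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["A"] * 5 + ["B"] * 5

rate_a = approval_rate(decisions, groups, "A")
rate_b = approval_rate(decisions, groups, "B")
gap = parity_gap(decisions, groups)
```

A large gap does not prove discrimination on its own, but it flags where the training data and the model's behavior deserve a closer look.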

Another major challenge is the **"Black Box" Problem** and the need for **Explainability (XAI)**. Deep learning models often achieve high accuracy but at the cost of transparency; it is difficult for humans to understand exactly why the model arrived at a particular decision. This lack of transparency is unacceptable in high-stakes fields like healthcare or justice, where understanding the reasoning (accountability) is paramount. Researchers are working to develop techniques that provide interpretable results, connecting cause and effect, or to make the algorithms themselves more inherently explainable.

Finally, **Privacy and Data Security** are paramount. The immense hunger of ML for data, particularly sensitive personal information, necessitates strict data handling protocols, robust anonymization techniques, and clear frameworks for obtaining informed consent, aligning with regulations like GDPR. Ensuring human oversight and accountability for autonomous decisions is crucial to maintaining ethical alignment with human values and law.

Conclusion: Empowering the Future with Data

Machine Learning has fundamentally transitioned from a theoretical discipline to the defining technology of the digital age. By enabling computers to learn inductively from data rather than deductively from explicit rules, ML has unlocked solutions to problems previously thought intractable, leading to breakthroughs in personalized medicine, global logistics optimization, and sophisticated autonomous systems. The journey forward focuses on scaling these models to be more robust, more general, and—critically—more ethical. As the quantity and quality of data continue to grow, ML will continue to empower human capabilities, automate complex processes, and drive innovation across every sector of the global economy, solidifying its role as the engine of the Fourth Industrial Revolution.

Further Reading and Resources

Explore these resources for a deeper understanding of Machine Learning principles and applications:

- What is Machine Learning? Types and uses (Google Cloud)

- Supervised vs. Unsupervised Learning: What's the Difference? (IBM)

- Ethical Considerations in AI & Machine Learning (Intelegain Technologies)

- Machine Learning Workflow Overview (MLQ.ai)

- What are Transformers in Artificial Intelligence? (AWS)
