How AI Detects Cyber Threats

Created on 17 January, 2026 • Tech Blog • 13-minute read

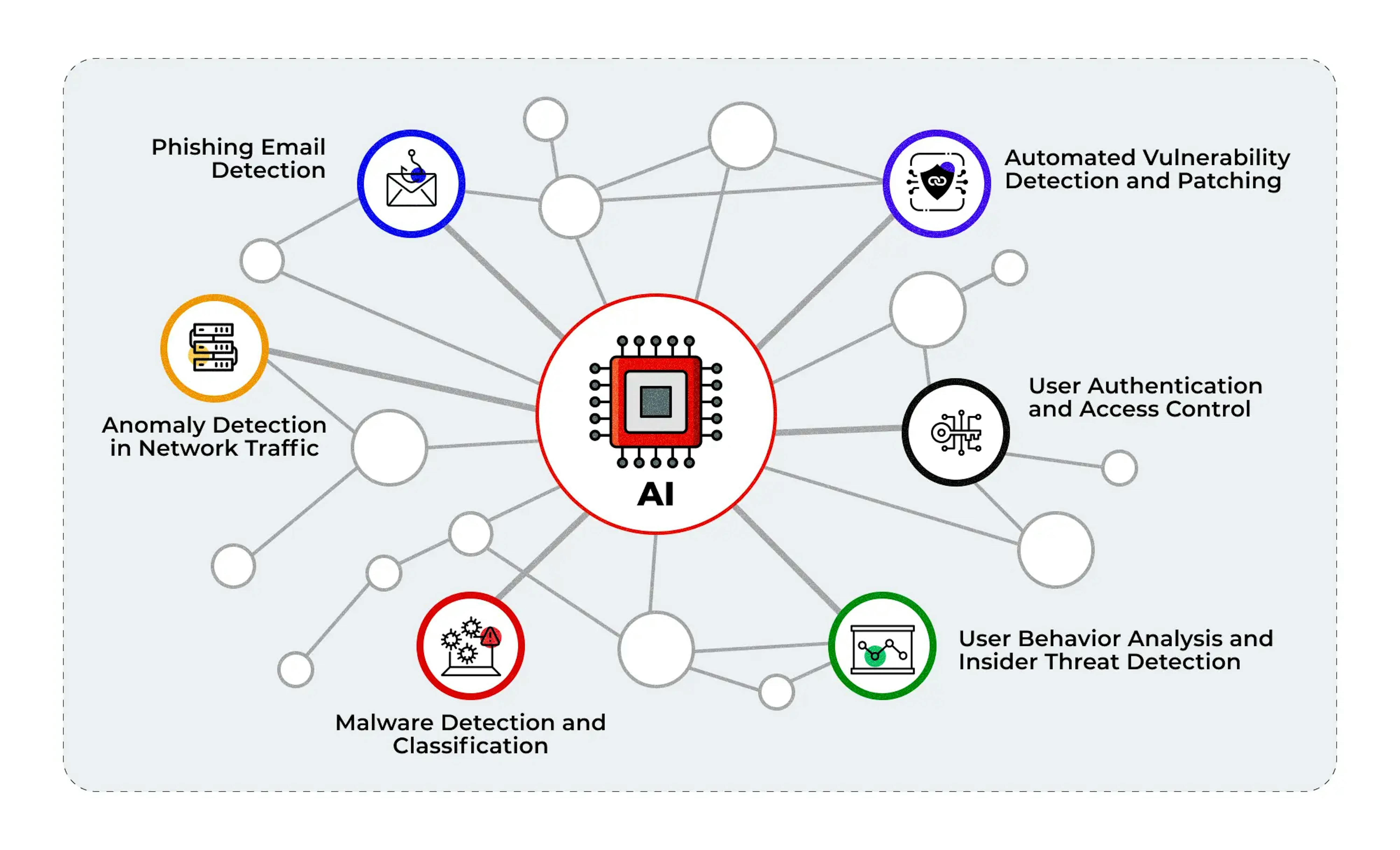

How AI detects cyber threats in 2026: Discover the power of behavioral fingerprinting, NLP phishing defense, autonomous threat hunting, and deep learning anomaly recognition.

Silicon Sentinels: How AI Detects and Neutralizes Cyber Threats in 2026

Table of Contents

The Shift from Static Signatures to Dynamic Intelligence

Behavioral Fingerprinting: The New Standard in Detection

Deep Learning and the Architecture of Anomaly Recognition

Natural Language Processing in the War Against AI Phishing

Autonomous Network Traffic Analysis and Real-Time Containment

Adversarial AI: Defending the Defenders

The Agentic Revolution: Autonomous Threat Hunting

Securing the Edge and the Hybrid Cloud Ecosystem

The Ethics of Automated Defense and Algorithmic Bias

Conclusion: The Convergence of Human and Machine Insight

The Shift from Static Signatures to Dynamic Intelligence

As we navigate the digital landscape of 2026, the traditional methods of cybersecurity have undergone a radical transformation. For decades, the industry relied on signature-based detection, a method that functioned much like an immune system recognizing a virus it has met before: if the "fingerprint" of a piece of malware matched a record in a global database, it was blocked. However, the rise of AI-driven cyberattacks has exposed the limits of this approach, because a signature can only match what has already been catalogued. Today's threats are adaptive and capable of mutating their own code to avoid recognition. In response, modern cybersecurity has shifted toward dynamic intelligence, where the goal is no longer only to recognize a known file but to understand the intent behind every action in a network. This transition marks the birth of the "Silicon Sentinel," an AI-driven layer of defense that operates at speeds and scales far beyond human capability.
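
To make the weakness of exact signatures concrete, here is a minimal sketch of hash-based signature matching in Python. The byte strings and the `KNOWN_BAD_SHA256` set are hypothetical stand-ins. Real antivirus engines also use byte patterns and fuzzy hashes, but exact-hash matching is the simplest case and shows the core problem most clearly: change one byte and the fingerprint no longer matches.

```python
import hashlib

# Hypothetical signature database: SHA-256 digests of previously catalogued samples.
KNOWN_BAD_SHA256 = {
    hashlib.sha256(b"malicious payload v1").hexdigest(),
}

def signature_match(sample: bytes) -> bool:
    """Return True if the sample's SHA-256 digest is in the known-bad set."""
    return hashlib.sha256(sample).hexdigest() in KNOWN_BAD_SHA256

original = b"malicious payload v1"
mutated = b"malicious payload v2"  # a single changed byte

print(signature_match(original))  # True
print(signature_match(mutated))   # False: the mutation slips past the exact signature
```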

The core of this evolution lies in the move from "detect and alert" to "predict and prevent." In 2026, organizations no longer wait for a breach to happen before they react. AI systems analyze vast quantities of data from emails, network logs, and user activity to recognize the "weak signals" of an intrusion long before a payload is delivered. This proactive stance is necessary because modern attackers use AI to automate their reconnaissance and scale their campaigns with near-perfect realism. By embedding AI into the heart of security operations, we have created an environment where the defense can evolve at the same rate as the attack, creating a constant state of equilibrium in the global cyber arms race.

Behavioral Fingerprinting: The New Standard in Detection

One of the most significant developments in 2026 is the maturing of behavioral fingerprinting. Traditional security models focused on what a user "knows" or "has," but AI focuses on what a user "does." Every entity in a network, human or machine, has a distinctive behavioral profile composed of many micro-actions such as typing speed, typical login times, file access sequences, and mouse movement patterns. AI-driven behavioral analytics tools establish a baseline of "normal" behavior for every entity in an organization. When a deviation occurs, such as a user accessing a sensitive database at an unusual hour or a process initiating a sudden, large-scale data transfer, the system can flag the anomaly within milliseconds. This approach is highly effective against insider threats and credential theft because it asks whether the activity matches the account's established behavior, not merely whether the login credentials are valid.
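
A baseline-and-deviation check can be sketched in a few lines. The example below is a deliberately simple stand-in for the behavioral analytics described above: it learns the mean and standard deviation of a single hypothetical feature (megabytes transferred per session for one user) and flags values whose z-score exceeds a threshold. Commercial tools model many features jointly; the feature, data, and threshold of 3.0 here are assumptions for illustration.

```python
from statistics import mean, stdev

def build_baseline(history: list[float]) -> tuple[float, float]:
    """Learn a simple baseline (mean, sample standard deviation) from past observations."""
    return mean(history), stdev(history)

def is_anomalous(value: float, baseline: tuple[float, float], threshold: float = 3.0) -> bool:
    """Flag a value whose distance from the mean exceeds `threshold` standard deviations."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu  # constant history: any change is a deviation
    return abs(value - mu) / sigma > threshold

# Hypothetical history: megabytes transferred per session by one user.
history_mb = [12.0, 15.0, 11.0, 14.0, 13.0, 12.5, 14.5]
baseline = build_baseline(history_mb)

print(is_anomalous(13.5, baseline))   # False: within the user's normal range
print(is_anomalous(950.0, baseline))  # True: sudden large-scale transfer
```

In practice one baseline would be kept per entity and per feature, which is what lets the same transfer volume be normal for a backup service and anomalous for an HR laptop.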

This level of visibility is particularly crucial as we see the rise of "living off the land" attacks, where hackers use legitimate administrative tools to conduct their activities. These attacks often bypass traditional scanners because the tools themselves are not malicious. However, AI can detect the subtle misuse of these tools by correlating seemingly unrelated events into a broader picture of an attack. In 2026, behavior-focused defense has become the standard for handling adaptive malware that never looks the same twice: its code may change, but what it tries to do tends not to. By focusing on the "how" rather than the "what," AI makes it increasingly difficult for adversaries to remain hidden within a compromised environment.
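
One common way to spot misuse of a legitimate tool is to look at who launched it. A shell started by an administrator is routine, and the same shell spawned by a word processor is not. The sketch below is a simple frequency-counting stand-in for the learned models the paragraph describes. It counts (parent, child) process pairs in hypothetical baseline telemetry and flags pairs seen fewer than `min_seen` times. The process names, event format, and threshold are all assumptions.

```python
from collections import Counter

def train_pair_counts(events):
    """Count how often each (parent, child) process pair appears in baseline telemetry."""
    return Counter((e["parent"], e["child"]) for e in events)

def rare_pairs(events, counts, min_seen=2):
    """Return events whose (parent, child) pair was seen fewer than min_seen times in the baseline."""
    return [e for e in events if counts[(e["parent"], e["child"])] < min_seen]

# Hypothetical baseline of process-creation events.
baseline = [
    {"parent": "explorer.exe", "child": "powershell.exe"},
    {"parent": "explorer.exe", "child": "powershell.exe"},
    {"parent": "services.exe", "child": "svchost.exe"},
    {"parent": "services.exe", "child": "svchost.exe"},
    {"parent": "explorer.exe", "child": "winword.exe"},
    {"parent": "explorer.exe", "child": "winword.exe"},
]
counts = train_pair_counts(baseline)

today = [
    {"parent": "explorer.exe", "child": "powershell.exe"},  # user-launched shell: common
    {"parent": "winword.exe", "child": "powershell.exe"},   # document spawning a shell: rare
]
for e in rare_pairs(today, counts):
    print("suspicious:", e["parent"], "->", e["child"])
```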

Deep Learning and the Architecture of Anomaly Recognition

The underlying engine of modern threat detection is the deep neural network. In 2026, these models are trained on petabytes of historical attack data and real-time threat intelligence feeds. These architectures allow the AI to recognize high-dimensional correlations that a human analyst would easily miss. For example, a multi-stage attack might begin with a subtle privilege escalation, followed by a minor configuration change in a cloud account, and conclude with a slow, low-noise exfiltration of data. Each of these events, taken in isolation, might appear harmless. However, deep learning models can connect these dots in near real time, identifying the underlying pattern of a coordinated campaign. This ability to "see the forest for the trees" is what gives AI a decisive advantage in the 2026 security stack.
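
The paragraph credits deep learning with discovering cross-event patterns like this one. The sketch below is not a neural network. It is a hand-written, rule-based sequence matcher that shows in the simplest possible form what "connecting the dots" means: three individually harmless events, by the same entity, in order, within a time window. The `Event` type, the stage names, and the seven-day window are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Event:
    entity: str   # user, service account, or host
    kind: str     # normalized event type
    ts: float     # seconds since some reference time

# Hypothetical campaign pattern: each stage must follow the previous one.
CAMPAIGN = ["privilege_escalation", "cloud_config_change", "slow_exfiltration"]

def matches_campaign(events, entity, window_s=7 * 24 * 3600):
    """True if the entity's events contain every CAMPAIGN stage, in order, within window_s seconds."""
    timeline = sorted((e for e in events if e.entity == entity), key=lambda e: e.ts)
    for i, first in enumerate(timeline):
        if first.kind != CAMPAIGN[0]:
            continue
        stage = 1
        for e in timeline[i + 1:]:
            if e.ts - first.ts > window_s:
                break  # too slow to count as one campaign from this start
            if e.kind == CAMPAIGN[stage]:
                stage += 1
                if stage == len(CAMPAIGN):
                    return True
    return False

events = [
    Event("svc-backup", "privilege_escalation", 0),
    Event("svc-backup", "login", 600),
    Event("svc-backup", "cloud_config_change", 3_600),
    Event("svc-backup", "slow_exfiltration", 86_400),
]
print(matches_campaign(events, "svc-backup"))  # True
```

A learned model generalizes this idea: instead of one hard-coded sequence, it scores many possible orderings and combinations of weak signals.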

Furthermore, these models use unsupervised learning to identify zero-day threats: attacks that exploit vulnerabilities no defender has seen or catalogued before. Because the AI learns what normal software behavior looks like, it can flag activity that departs sharply from that norm even when the code has no known signature. This has significantly reduced attacker "dwell time," the period between an intrusion and its discovery. In 2026, the best Security Operations Centers (SOCs) value context and speed over the sheer volume of alerts, using AI to filter out false positives and focus the attention of human experts on the most critical, high-risk incidents. The result is a more resilient and efficient defensive posture that scales with the complexity of the enterprise.
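
Unsupervised detection means no labeled attacks are needed: the model only learns what "usual" looks like. As a minimal sketch, the example below uses k-nearest-neighbour distance, a classic unsupervised outlier score, in place of the deep models described above. Each point's score is its mean distance to its `k` closest neighbours, so isolated behavior scores high. The per-process features are hypothetical, and a real deployment would normalize features before measuring distance.

```python
import math

def knn_scores(points, k=2):
    """Unsupervised outlier score: mean distance from each point to its k nearest neighbours."""
    scores = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(sum(dists[:k]) / k)
    return scores

# Hypothetical per-process features:
# (system calls per second, distinct files touched, outbound connections)
processes = [
    (120, 8, 1),
    (115, 9, 1),
    (130, 7, 2),
    (125, 8, 1),
    (118, 10, 2),
    (900, 450, 35),  # never-before-seen behavior; no signature or label required
]
scores = knn_scores(processes)
most_anomalous = max(range(len(processes)), key=scores.__getitem__)
print(most_anomalous)  # 5
```

Ranking by score, rather than alerting on every deviation, is also how such a detector feeds the SOC triage described above: analysts see the highest-scoring items first.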

Natural Language Processing in the War Against AI Phishing

Phishing remains the primary entry point for cyberattacks in 2026, but the nature of the threat has changed. Attackers now use Large Language Models (LLMs) to generate highly personalized, linguistically polished spear-phishing emails that can be nearly indistinguishable from legitimate communications. Traditional spam filters and keyword-based scanners struggle against these attacks. To counter this, organizations have deployed language-aware detection systems powered by Natural Language Processing (NLP). These systems do not just look for malicious links; they analyze the tone, word patterns, and intent of every message. By comparing a new email against the historical communication style of the supposed sender, the AI can detect the subtle "uncanny valley" of a synthetic message, flagging it as a potential deepfake or phishing attempt.
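
Comparing a message against a sender's historical style can be illustrated with a classic stylometric baseline: character trigram profiles compared by cosine similarity. This is far simpler than the NLP models described above and is only a sketch under stated assumptions. The sender "dana," her message history, and both candidate emails are invented. A low score means only "this does not read like the usual sender," which would be one signal among many.

```python
import math
from collections import Counter

def char_ngrams(text, n=3):
    """Character n-gram frequency profile of lower-cased, whitespace-normalized text."""
    t = " ".join(text.lower().split())
    return Counter(t[i:i + n] for i in range(len(t) - n + 1))

def cosine(a, b):
    """Cosine similarity between two sparse frequency vectors."""
    dot = sum(a[g] * b[g] for g in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Hypothetical history of messages genuinely sent by "dana".
history = [
    "hey team, quick one - can someone grab the q3 numbers? cheers, d",
    "hey all, running 5 mins late to standup. cheers, d",
    "quick one: who has the vpn token for the lab box? cheers, d",
]
profile = char_ngrams(" ".join(history))

candidate_genuine = "hey team, quick one - is the lab box back up? cheers, d"
candidate_suspect = ("Dear Colleague, I kindly request that you process the attached "
                     "invoice urgently and confirm completion at your earliest convenience.")

s_genuine = cosine(profile, char_ngrams(candidate_genuine))
s_suspect = cosine(profile, char_ngrams(candidate_suspect))
print(round(s_genuine, 2), round(s_suspect, 2))  # the suspect message scores lower
```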

This linguistic analysis extends beyond email to messaging platforms and voice communications. In the "Deepfake Era" of 2026, AI-based detection systems analyze metadata, visual inconsistencies in video calls, and speech patterns to confirm the authenticity of a digital identity. This is a critical defense against Business Email Compromise (BEC) and social engineering attacks that target the human element of security. By acting as a "Language Firewall," NLP-based AI provides a layer of protection that keeps pace with the increasing sophistication of generative AI attacks, ensuring that trust—the most valuable currency in any organization—remains intact in an increasingly synthetic world.

Autonomous Network Traffic Analysis and Real-Time Containment

The speed of modern cyberattacks means that human-in-the-loop response is often too slow to prevent damage. In 2026, the standard for network defense is Autonomous Network Traffic Analysis (NTA). These systems use machine learning to discern patterns and anomalies in vast volumes of network data in real time. By monitoring every packet and every connection, the AI can detect the telltale signs of lateral movement or command-and-control communication as it happens. More importantly, these systems are empowered to take immediate action. If a node is identified as compromised, the AI can autonomously isolate that segment of the network, revoke access credentials, and stop malicious processes before the attacker can even realize they have been detected.
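
One simple indicator of lateral movement is fan-out: a single host suddenly contacting many distinct internal peers in a short window, as a scanning or spreading implant would. The sketch below flags such hosts and passes them to a containment hook. The flow-record format, the five-minute window, the threshold of 20 peers, and the `contain` function are all hypothetical. A real system would call its own network-access-control or EDR API where this sketch only records the host.

```python
from collections import defaultdict

def detect_fanout(flows, window_s=300, max_peers=20):
    """Return hosts that contacted more than max_peers distinct peers within any window_s-second window."""
    by_src = defaultdict(list)
    for ts, src, dst in flows:
        by_src[src].append((ts, dst))
    flagged = set()
    for src, items in by_src.items():
        items.sort()
        for i, (start, _) in enumerate(items):
            peers = {dst for ts, dst in items[i:] if ts - start <= window_s}
            if len(peers) > max_peers:
                flagged.add(src)
                break
    return flagged

def contain(host, quarantine):
    """Hypothetical containment hook: here it only records the host in a quarantine set."""
    quarantine.add(host)

# Hypothetical flow records: (timestamp_s, source_host, destination_host)
flows = [(t, "10.0.0.5", f"10.0.1.{t}") for t in range(30)]     # sweeps 30 peers in 30 s
flows += [(t * 60, "10.0.0.7", "10.0.0.2") for t in range(10)]  # steady single-peer traffic

quarantine = set()
for host in detect_fanout(flows):
    contain(host, quarantine)
print(quarantine)  # {'10.0.0.5'}
```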

This level of "Automated Containment" can dramatically reduce the impact of ransomware and other destructive attacks. In 2026, a network is no longer a static castle with a moat; it is a self-healing ecosystem. When the AI detects a threat, it doesn't just block it; it also initiates forensic-level artifact collection, capturing the state of memory and disk before the evidence can be destroyed or modified. This automated forensics allows security teams to conduct faster post-incident reviews and understand the root cause of an attack without the manual labor of data gathering. The autonomy of the 2026 network means that even if an attacker finds a gap, the window in which they can exploit it shrinks to a matter of seconds.
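
Automated evidence capture has to decide what to grab first, because some artifacts disappear within seconds while others persist for months. A widely used rule is to collect in "order of volatility," as described in RFC 3227 (memory and process state before temporary files, temporary files before disk, disk before remote logs). The sketch below sorts a hypothetical list of artifact types by a rank that loosely follows that ordering. The artifact names and rank values are assumptions.

```python
# Lower rank = more volatile = capture first. The ranks loosely follow the
# "order of volatility" in RFC 3227; the artifact names are hypothetical.
VOLATILITY_RANK = {
    "process_table": 1,
    "network_connections": 1,
    "memory": 1,
    "temp_files": 2,
    "disk_image": 3,
    "remote_logs": 4,
}

def collection_plan(artifacts):
    """Order artifacts so the most volatile evidence is captured first; unknown kinds go last."""
    fallback = max(VOLATILITY_RANK.values()) + 1
    return sorted(artifacts, key=lambda a: VOLATILITY_RANK.get(a, fallback))

plan = collection_plan(["disk_image", "remote_logs", "memory", "temp_files"])
print(plan)  # ['memory', 'temp_files', 'disk_image', 'remote_logs']
```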

Adversarial AI: Defending the Defenders

A new frontier in 2026 is the protection of the AI models themselves. As defenders rely more on AI, attackers have turned their attention to "Adversarial AI": attacks designed to poison training data, evade model detection, or steal the model's logic. This has led to the development of AI Security Posture Management (AI-SPM), which continuously monitors the integrity of the organization's defensive algorithms. These systems help ensure that the AI is not being "fooled" by carefully crafted inputs that mimic normal behavior while carrying a hidden malicious intent. Defending the defenders has become a vital part of the cybersecurity mission, as a compromised AI model could become the ultimate "backdoor" into an enterprise.
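
One narrow but concrete piece of monitoring model integrity is checking that deployed model files have not been swapped or altered since they were approved. The sketch below records a SHA-256 digest for each artifact at deployment time and later reports any file whose digest has changed. It covers tampering with stored artifacts only. It does not detect data poisoning that happened before training, or evasion inputs at inference time. The file name and contents are hypothetical placeholders.

```python
import hashlib
import os
import tempfile

def sha256_file(path, chunk_size=65536):
    """Stream a file through SHA-256 so large model files need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def verify_models(manifest):
    """Return the paths whose current digest differs from the known-good manifest."""
    return [path for path, expected in manifest.items() if sha256_file(path) != expected]

# Demo with a throwaway file standing in for a model artifact.
with tempfile.TemporaryDirectory() as d:
    model_path = os.path.join(d, "detector.bin")
    with open(model_path, "wb") as f:
        f.write(b"weights-v1")
    manifest = {model_path: sha256_file(model_path)}  # recorded at deployment time
    clean = verify_models(manifest)
    with open(model_path, "ab") as f:
        f.write(b"tampered")  # simulate an unauthorized modification
    tampered = verify_models(manifest)

print(clean, len(tampered))  # [] 1
```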

This cat-and-mouse game has forced a shift toward "Robust Learning" techniques such as adversarial training, where AI models are trained on adversarial examples to build resilience against evasion. In 2026, the most advanced security platforms are those that can detect when they are being targeted by an adversarial attack. This requires a deep understanding of the mathematical foundations of the models and constant vigilance against "Data Poisoning" campaigns. The battle for the integrity of the machine's mind is a primary concern for the researchers and engineers of 2026, making AI security inseparable from the overall security of the business itself. We are no longer just securing code; we are securing the very process of learning.
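
Adversarial training can be shown end to end on a tiny model. The sketch below uses the Fast Gradient Sign Method (FGSM) to craft an evasion example for each training point and trains a logistic-regression classifier on both the clean and perturbed copies. For logistic loss, the gradient with respect to the input is `(p - y) * w`, so FGSM nudges each feature by `eps` in the sign of that gradient. The two-feature dataset and `eps=0.1` are hypothetical. Production systems use ML frameworks and stronger attacks, but the training loop has the same shape.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Probability that feature vector x is malicious under weights w and bias b."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def loss(w, b, x, y):
    """Binary cross-entropy, clamped to avoid log(0)."""
    p = min(max(predict(w, b, x), 1e-12), 1 - 1e-12)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: move each feature by eps in the sign of d(loss)/dx = (p - y) * w."""
    g = predict(w, b, x) - y
    return [xi + eps * math.copysign(1.0, g * wi) if g * wi != 0 else xi
            for xi, wi in zip(x, w)]

def train(data, eps=0.0, epochs=200, lr=0.5):
    """SGD on logistic loss; if eps > 0, also train on an FGSM copy of each example."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            batch = [(x, y)] if eps == 0 else [(x, y), (fgsm(w, b, x, y, eps), y)]
            for xs, ys in batch:
                g = predict(w, b, xs) - ys
                w = [wi - lr * g * xi for wi, xi in zip(w, xs)]
                b -= lr * g
    return w, b

# Hypothetical, already-normalized features: label 1 = malicious, 0 = benign.
data = [([0.0, 0.1], 0), ([0.1, 0.0], 0), ([0.2, 0.1], 0),
        ([0.9, 1.0], 1), ([1.0, 0.9], 1), ([0.8, 0.9], 1)]

w, b = train(data, eps=0.1)
clean_acc = sum((predict(w, b, x) > 0.5) == bool(y) for x, y in data) / len(data)
x0, y0 = data[0]
print(clean_acc, loss(w, b, x0, y0) < loss(w, b, fgsm(w, b, x0, y0, 0.1), y0))
```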

The Agentic Revolution: Autonomous Threat Hunting

The most transformative shift in 2026 is the evolution of AI from passive tools into active, autonomous members of the security team, a movement known as the Agentic AI revolution. Unlike traditional automation, which follows a rigid script, Agentic AI systems operate based on goals and context. They can perform "Autonomous Threat Hunting," proactively scouring the network for hidden vulnerabilities, misconfigurations, and silent, persistent threats that have not yet triggered an alert. These agents can simulate millions of attack paths, identifying where an organization is most likely to be targeted next and suggesting preemptive hardening measures. For SOC teams, these agents take on the burden of tier-one triage, enrichment, and containment, allowing human analysts to focus on high-level strategy and judgment.
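
Attack-path simulation can be sketched as graph search. In the example below, each node is an asset and each edge means "an attacker on A can reach or pivot to B." A breadth-first search enumerates every simple path from the internet to a sensitive asset, and nodes that appear on every path are candidate choke points for preemptive hardening. The graph and asset names are hypothetical. Exhaustive enumeration also grows combinatorially, so real tools prune or sample paths on large estates.

```python
from collections import deque

# Hypothetical reachability graph: edge A -> B means an attacker on A can pivot to B.
GRAPH = {
    "internet": ["web_server", "vpn_gateway"],
    "web_server": ["app_server"],
    "vpn_gateway": ["jump_host"],
    "jump_host": ["app_server", "domain_controller"],
    "app_server": ["database"],
    "domain_controller": [],
    "database": [],
}

def attack_paths(graph, source, target):
    """Enumerate all simple paths from source to target, shortest first (breadth-first)."""
    paths, queue = [], deque([[source]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            paths.append(path)
            continue
        for nxt in graph.get(node, []):
            if nxt not in path:  # skip cycles
                queue.append(path + [nxt])
    return paths

paths = attack_paths(GRAPH, "internet", "database")
for p in paths:
    print(" -> ".join(p))

# Intermediate nodes shared by every path are candidate choke points to harden first.
choke_points = set.intersection(*(set(p[1:-1]) for p in paths)) if paths else set()
print(choke_points)
```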

The impact of agentic systems is most visible in the reduction of Mean Time to Respond (MTTR). In mature security teams, agentic AI has reportedly cut response times by 30 to 50 percent compared to the pre-agentic era. These agents also maintain immutable audit trails of every action they take, generating regulator-ready incident summaries and reducing the compliance burden on the organization. In 2026, the workforce is a hybrid of human expertise and machine speed. The human remains the orchestrator of policy and the arbiter of ethics, while the AI agent is the tireless soldier on the digital front lines. This partnership is the cornerstone of cyber resilience in the mid-2020s, providing a level of defense that is as intelligent as it is relentless.
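
A common way to make an agent's audit trail tamper-evident is a hash chain: each entry stores the hash of the previous entry, so editing any earlier record breaks every link after it. The sketch below is a minimal in-memory version. The agent name, actions, and IP addresses are hypothetical. Note one limitation: simply cutting entries off the end is not detectable this way unless the latest hash is also anchored somewhere external.

```python
import hashlib
import json
import time

def _digest(body):
    """SHA-256 over a canonical JSON encoding of an entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

class AuditLog:
    """Append-only log where each entry commits to the previous one via a SHA-256 hash chain."""

    def __init__(self):
        self.entries = []

    def append(self, actor, action, detail):
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"ts": time.time(), "actor": actor, "action": action, "detail": detail, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Recompute every hash; an edited or removed entry before the tail breaks the chain."""
        prev = "0" * 64
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != e["hash"]:
                return False
            prev = e["hash"]
        return True

log = AuditLog()
log.append("triage-agent", "enrich", "looked up reputation for 203.0.113.9")
log.append("triage-agent", "contain", "isolated host 10.0.0.5")
print(log.verify())  # True
log.entries[0]["detail"] = "nothing happened"  # simulated tampering
print(log.verify())  # False
```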

Securing the Edge and the Hybrid Cloud Ecosystem

As organizations continue to push their operations into the cloud and onto the edge, the digital attack surface has exploded in complexity. In 2026, visibility is the greatest challenge, as a single hidden gap in a multi-cloud environment can become the entry point for an autonomous compromise chain. AI has become the essential tool for managing this visibility, ingesting data from OT (Operational Technology), IoT (Internet of Things), and edge assets and correlating it with IT signals. By learning the "normal" data flows between these diverse environments, AI can detect the subtle anomalies that signify an intrusion, even when the activity occurs in an unmonitored corner of the cloud. This "Universal Visibility" is what allows for the enforcement of a true Zero-Trust architecture.
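
Learning "normal" flows between environments can start as something very simple: record which (source zone, destination zone, port) combinations occur during a learning period, then surface anything new for denial or review instead of allowing it implicitly, in keeping with zero trust. The sketch below does exactly that. The zone names are hypothetical. Port 8883 is the conventional port for MQTT over TLS and 445 is SMB. Real systems learn richer features such as volume, timing, and identity.

```python
def learn_flows(observed):
    """Baseline: the set of (source zone, destination zone, port) tuples seen while learning."""
    return set(observed)

def new_flows(current, baseline):
    """Flows never seen during learning; under zero trust these are denied or reviewed, not allowed."""
    return [f for f in current if f not in baseline]

baseline = learn_flows([
    ("iot_sensors", "edge_gateway", 8883),  # MQTT over TLS
    ("edge_gateway", "cloud_ingest", 443),
    ("corp_it", "cloud_ingest", 443),
])

current = [
    ("iot_sensors", "edge_gateway", 8883),
    ("iot_sensors", "corp_it", 445),  # a sensor suddenly speaking SMB to the IT network
]
print(new_flows(current, baseline))  # [('iot_sensors', 'corp_it', 445)]
```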

Furthermore, AI is being used to automate "Continuous Exposure Management," which has replaced the traditional annual security scan. In 2026, an organization's security posture is assessed every minute. AI agents crawl through CI/CD pipelines and cloud build systems to identify misconfigurations and configuration drift in real time. This proactive approach ensures that the environment is "Secure by Design" and remains so as it scales. By providing a single, unified view of the entire digital estate, AI allows security teams to manage the complexity of the hybrid cloud without being overwhelmed by the sheer volume of data. In 2026, the edge is no longer a vulnerability; it is a protected frontier of the intelligent enterprise.
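
At its core, drift detection compares the configuration a resource should have with the configuration it actually has. The sketch below walks two nested dictionaries and reports each mismatched setting by its dotted path. The configuration keys and values are hypothetical and not tied to any particular cloud provider's schema.

```python
def find_drift(desired, actual, prefix=""):
    """Recursively compare desired vs. actual config; return (path, desired, actual) per mismatch."""
    drift = []
    for key in sorted(desired.keys() | actual.keys()):
        path = f"{prefix}{key}"
        d, a = desired.get(key), actual.get(key)
        if isinstance(d, dict) and isinstance(a, dict):
            drift.extend(find_drift(d, a, path + "."))
        elif d != a:
            drift.append((path, d, a))
    return drift

# Hypothetical storage-bucket settings: what the pipeline declared vs. what is deployed.
desired = {"bucket": {"public_access": False, "encryption": "kms"}, "logging": True}
actual = {"bucket": {"public_access": True, "encryption": "kms"}, "logging": True}

for path, want, got in find_drift(desired, actual):
    print(f"DRIFT {path}: expected {want!r}, found {got!r}")
```

Running the comparison on every commit and on a schedule, rather than once a year, is what turns it into the continuous exposure management the paragraph describes.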

The Ethics of Automated Defense and Algorithmic Bias

As we grant AI the power to autonomously manage our defense, the ethical implications of these decisions have moved to the center of the global conversation. The primary risk in 2026 is the potential for algorithmic bias, where a detection model might unfairly target certain users or behaviors based on flawed training data. If an AI system identifies a pattern as "malicious" purely because it is unusual, it can lead to the isolation of legitimate users or the disruption of critical business processes. This has forced the industry to adopt "Responsible AI" frameworks, where every automated decision must be auditable, transparent, and grounded in clear policy guardrails. We are learning that the machine’s efficiency must always be balanced with human fairness and common sense.
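
"Clear policy guardrails" can be made concrete as an explicit gate in front of every automated action. The sketch below lets low-impact actions run autonomously, requires human approval for high-impact ones, denies anything unlisted by default, and records the reason with every decision so it can be audited later. The action names and the split between the two lists are hypothetical policy choices, not a standard.

```python
from dataclasses import dataclass

# Hypothetical policy: actions the AI may take alone vs. actions that need a human.
AUTONOMOUS_ACTIONS = {"enrich_alert", "block_ip", "isolate_workstation"}
HUMAN_APPROVAL_ACTIONS = {"disable_user_account", "isolate_server"}

@dataclass
class Decision:
    action: str
    target: str
    reason: str
    approved: bool
    needs_human: bool

def gate(action, target, reason, human_approved=False):
    """Decide whether an automated action may run, and keep the reason so it stays auditable."""
    if action in AUTONOMOUS_ACTIONS:
        return Decision(action, target, reason, True, False)
    if action in HUMAN_APPROVAL_ACTIONS:
        return Decision(action, target, reason, human_approved, True)
    return Decision(action, target, reason, False, True)  # unlisted actions: denied by default

d1 = gate("isolate_workstation", "laptop-042", "ransomware-like mass file renames")
d2 = gate("disable_user_account", "j.doe", "login from an unusual country")
print(d1.approved, d2.approved, d2.needs_human)  # True False True
```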

Moreover, the use of AI in cybersecurity has created a new kind of "Sovereignty Dilemma." As organizations increasingly rely on third-party AI providers for their defense, questions arise about who owns the threat data and the learning models. In 2026, the focus has shifted toward "Digital Sovereignty," with nations and corporations building their own localized AI models to ensure that their defensive intelligence remains under their direct control. Establishing the boundaries of what a machine can and cannot do—especially when it comes to the "Manipulation of Judgment"—is the primary ethical challenge of our time. By ensuring that human expertise remains at the top of the decision-making pyramid, we can enjoy the benefits of AI automation while protecting the fundamental rights of the individuals within the network.

Conclusion: The Convergence of Human and Machine Insight

In conclusion, the state of cyber threat detection in 2026 is defined by the convergence of human judgment and machine speed. We have moved from a reactive, signature-based world into a proactive, intelligent, and autonomous defensive reality. AI has provided the tools to navigate the complexity of the modern attack surface, allowing us to recognize patterns, analyze language, and contain threats with a precision that was previously unimaginable. From behavioral fingerprinting to the agentic revolution, the innovations of the past few years have turned the tide of the cyber arms race, giving the defenders a decisive advantage in the battle for digital integrity.

However, the success of this new era depends not just on the quality of our algorithms, but on the wisdom of our orchestration. As we look beyond 2026, the goal is to build a "Human-Centric Cyber Future," where technology serves as a force multiplier for human intent. We must continue to invest in the education and training of our human firewalls, ensuring that the professionals of the future are as fluent in AI logic as they are in strategic risk management. The future of cybersecurity is not a choice between humans or machines; it is the seamless integration of both. By designing a system that is secure, equitable, and profoundly intelligent, we can protect the foundations of our digital society and build a future where the impossible is just another threat that we have already predicted. The era of the Silicon Sentinel is here, and it is our responsibility to lead it.
